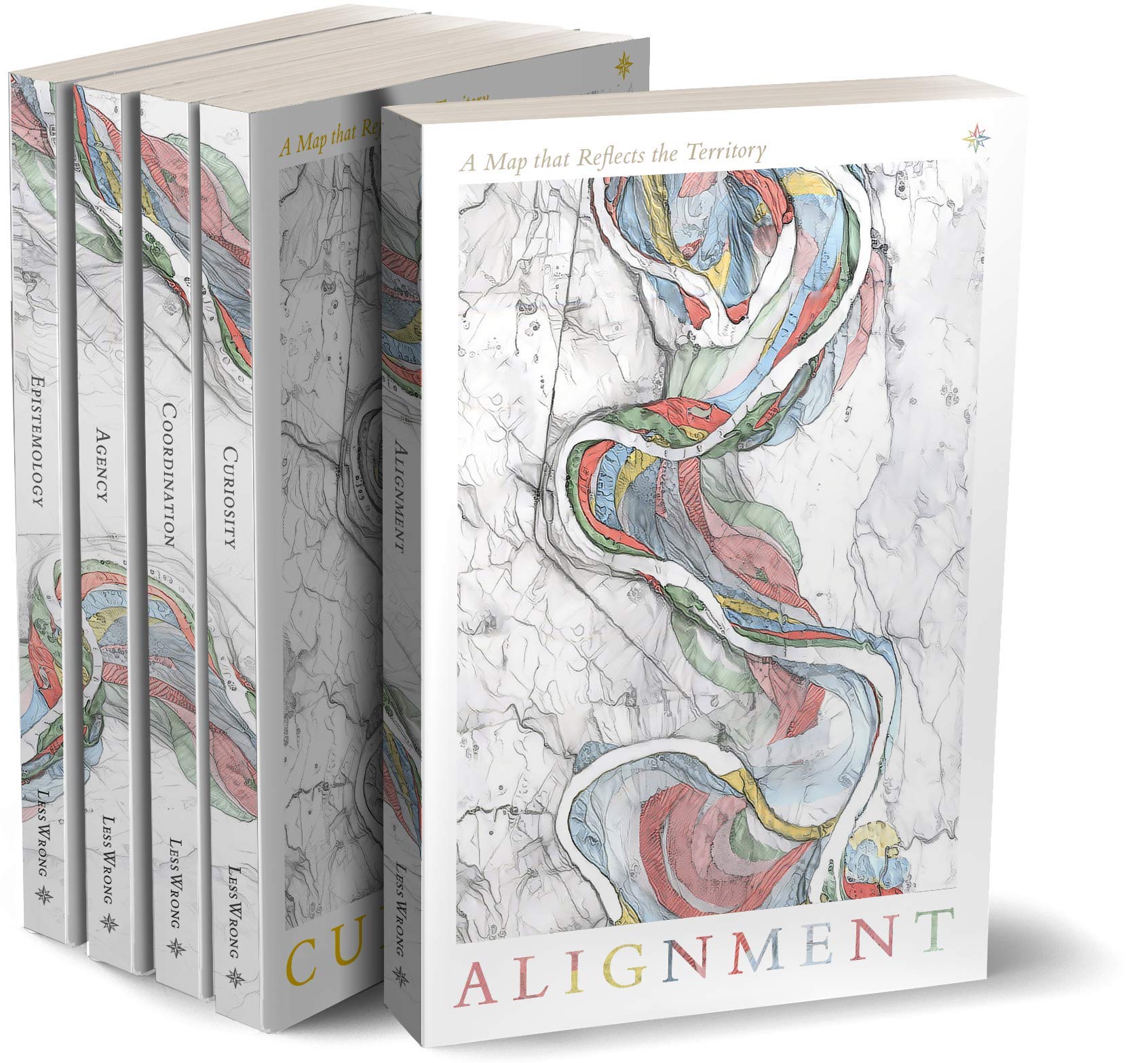

This is a collection of our best essays from 2018, as determined by our 2018 Review. It contains over 40 redesigned graphs, packaged into a beautiful set of 5 books, each small enough to fit in your pocket. The set features essays from 24 authors, including Eliezer Yudkowsky, Scott Alexander and Wei Dai, as well as comment sections and shorter interludes from even more. This book set is one of the best introductions to the latest thinking of the rationality community. It is a whirlwind tour through topics ranging from art to AI, introspection to integrity, software to social science, and beyond. Overall, A Map that Reflects the Territory promises an intellectual adventure that is both inviting and challenging for anyone curious about the art of thinking clearly. The books can be appreciated without any previous LessWrong experience.

Authors

Scott Alexander is a pen name used by a blogger on Slate Star Codex, Astral Codex Ten and other sites.

From Wikipedia: Eliezer Shlomo Yudkowsky is an American artificial intelligence researcher concerned with the singularity and an advocate of friendly artificial intelligence, living in Redwood City, California. Yudkowsky did not attend high school and is an autodidact with no formal education in artificial intelligence. He co-founded the nonprofit Singularity Institute for Artificial Intelligence (SIAI) in 2000 and continues to be employed as a full-time Research Fellow there. Yudkowsky's research focuses on Artificial Intelligence theory for self-understanding, self-modification, and recursive self-improvement (seed AI); and also on artificial-intelligence architectures and decision theories for stably benevolent motivational structures (Friendly AI, and Coherent Extrapolated Volition in particular). Apart from his research work, Yudkowsky has written explanations of various philosophical topics in non-academic language, particularly on rationality, such as "An Intuitive Explanation of Bayes' Theorem". Yudkowsky was, along with Robin Hanson, one of the principal contributors to the blog Overcoming Bias sponsored by the Future of Humanity Institute of Oxford University. In early 2009, he helped to found Less Wrong, a "community blog devoted to refining the art of human rationality". The Sequences on Less Wrong, comprising over two years of blog posts on epistemology, Artificial Intelligence, and metaethics, form the single largest bulk of Yudkowsky's writing.